New Abstracts

Society for Research in Child Development

May 1-3, 2025 | Minneapolis, Minnesota

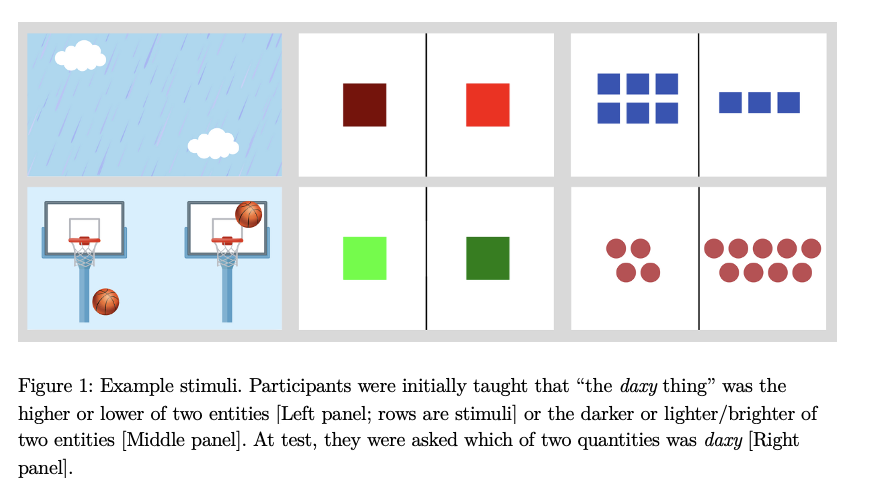

Children extend novel words from color to quantity just as systematically as from verticality

Arielle Belluck, Sarah Gemmell, Adele E Goldberg

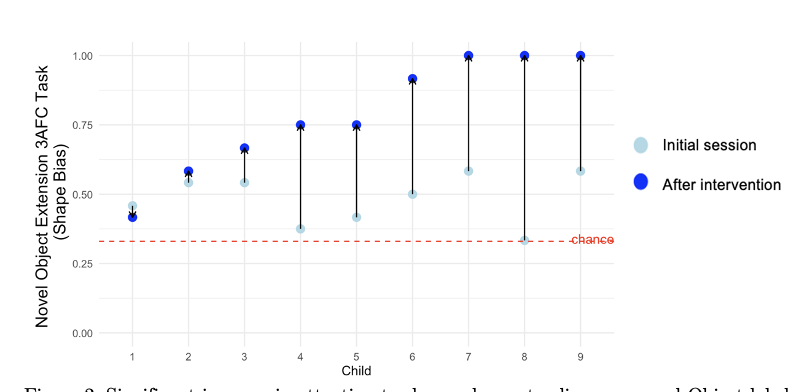

Autistic Children Learn the Shape Bias for Object Labels: Evidence from Noun and Adjective Tasks

Arielle Belluck, Momna Ahmed, Julia Nguyen & Adele E Goldberg

Significant increase in attention to shape when extending novel object labels after the intervention (dark blue) compared to before (light blue).

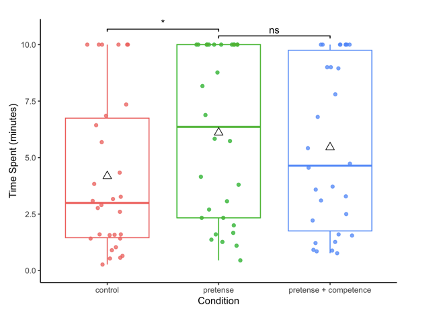

Pretend play improves children’s persistence regardless of character competence

Arielle Belluck & Adele E Goldberg

Time spent persisting by condition. * = p < .05

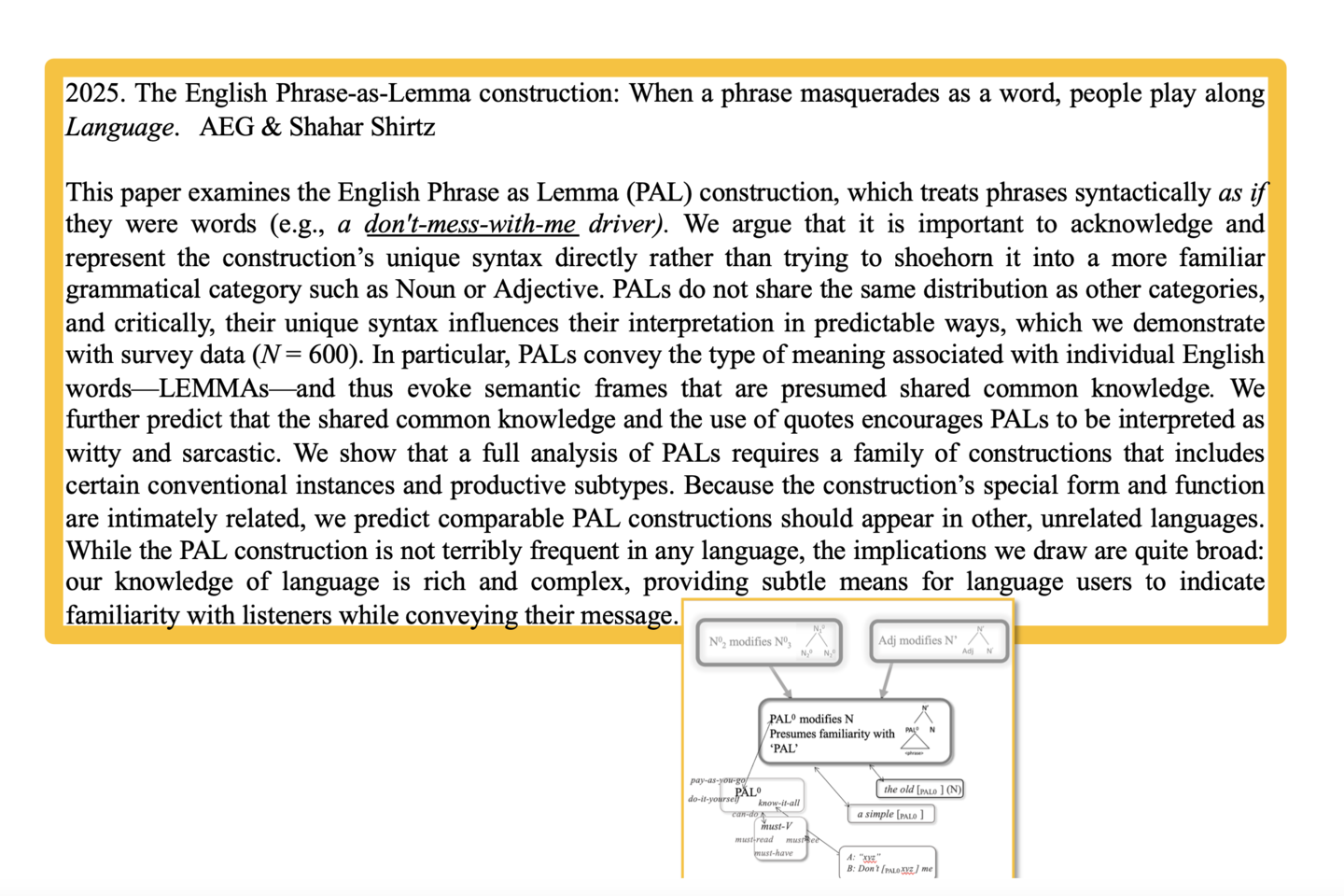

PAL image

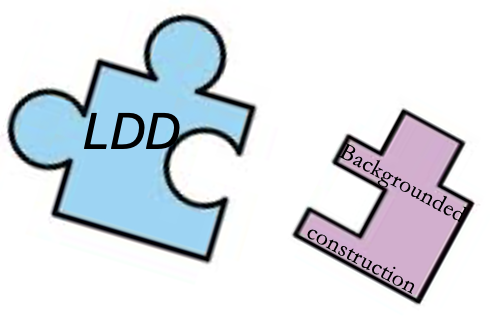

Constructions

"Island constraints" are predicted by conflicting functions of the constructions being combined

Autism and Language

The usage-based constructionist approach to language offers a clear perspective and suggests testable ways to mitigate the special challenges language learning presents to autistic individuals.

Conventional metaphors are more engaging than literal paraphrases or concrete descriptions.

Conventional metaphors (e.g., a firm grasp on an idea) are extremely common. We have discovered a new reason they are used so often: they are more engaging, in that they evoke more focused attention than carefully matched literal paraphrases (e.g., a good understanding of an idea) or concrete descriptions (e.g., a firm grip on a doorknob). We initially discovered this by accident: in an fMRI study, the amygdala, whose activity is standardly taken to indicate heightened engagement with a task, was more active when participants passively read metaphorical sentences than when they read literal paraphrases that differed by only a single word (Citron & Goldberg, 2014). Since then, we’ve probed this discovery in a number of ways and have confirmed the effect regardless of which conventional metaphors are used or whether they appear in longer passages or in isolated sentences (Citron et al., 2016; Citron, Michaelis & Goldberg, 2020). In each study, sentences were normed and matched on complexity, plausibility, emotional valence, intensity, and familiarity. Most recently, we measured pupil dilation in a light-controlled room as sentences were read aloud. As predicted, metaphorical sentences elicited greater event-evoked pupil dilation than literal paraphrases or concrete descriptions, beginning immediately and lasting seconds beyond the end of the sentences. We conclude that conventional metaphors are more engaging than literal paraphrases or concrete descriptions in a way that is irreducible to difficulty or ease, amount of information, short-term lexical access, or downstream inferences.

A Chat about constructionist approaches and LLMs

Parallels between the usage-based constructionist perspective and LLMs

| PARALLELS | Usage-based constructionist approach to language | ChatGPT, GPT-4 & similar recent LLMs |
|---|---|---|
| Lossy Compression and Interpolation | Human brains represent the world imperfectly (lossy), with limited resources (compressed); we generalize from familiar to related cases (via interpolation/coverage/induction). | Every model involves lossy compression and interpolation; all neural net models interpolate to generalize within the range of the training data. |
| Conform to Conventions | Humans display a natural inclination to conform to the conventions of their communities. | Pre-training to predict the next word requires that outputs conform to conventions in the input. |
| Complex Dynamic Network | Structured distributed representations at varying levels of complexity and abstraction are learned from input + understanding of others’ intentions and real-world grounding; can be flexibly extended. | Structured distributed representations at varying levels of complexity and abstraction are learned from massive amounts of input text; can be flexibly extended. |
| Context-dependent Interpretations | Humans use linguistic and non-linguistic context for interpretation. | Only linguistic context is available, via a thousand words of preceding text. |
| Relationships among Discontinuous Elements | Made possible via working memory and attention. | Made possible by attention heads (Transformer models). |
| Goal is to be Helpful | Humans display a natural tendency to be helpful to others in their communities. | Special training, as in InstructGPT, taught GPTs to provide helpful responses. |